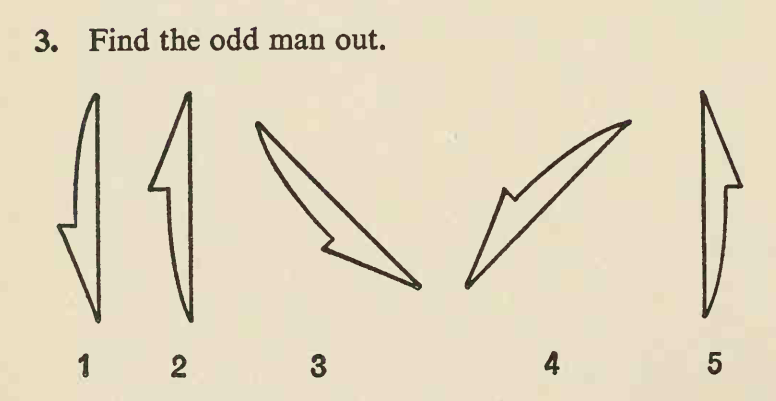

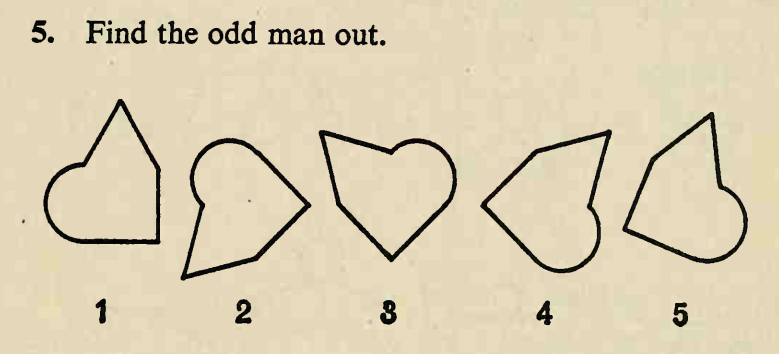

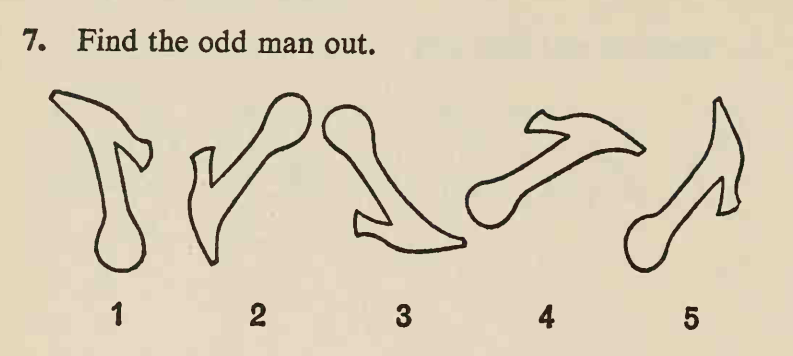

I took an online visual IQ test, and many of its questions look like this:

The image URLs are:

```python
[f"https://www.idrlabs.com/static/i/eysenck-iq/en/{i}.png" for i in range(1, 51)]
```

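Each image contains several shapes that are almost identical and almost the same size. Most of them can be obtained from one another by rotation and translation. Exactly one of them can only be obtained from the others by a reflection: it has the opposite chirality, and it is the "odd one out". The task is to find it.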

The answers for the images above are 2, 1 and 4 respectively. I want to automate this.
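As a rough illustration of the "different chirality" criterion, the sketch below makes two assumptions. First, it assumes each shape has already been extracted as an upright boolean mask. Second, it assumes all masks have been resized to a common shape. Neither step is part of the question's code, and the names `iou`, `rotation_match` and `odd_one_out` are hypothetical.

"Upright" still leaves a possible half turn, so each pair of masks is compared at 0° and at 180°. Mirror images are never tried. The mask that overlaps the others worst under rotation alone is reported as the odd one out. The returned index is 0-based, whereas the quiz answers are 1-based.

```python
import numpy as np


def iou(a, b):
    """Intersection over union of two boolean masks of the same shape."""
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 1.0


def rotation_match(a, b):
    """Best IoU of `a` against `b` using rotations only (0 and 180 degrees).

    Upright masks can still differ by a half turn, so both orientations are
    tried; mirror images are deliberately never tried.
    """
    return max(iou(a, np.rot90(b, k)) for k in (0, 2))


def odd_one_out(masks):
    """0-based index of the mask that matches the others worst under
    rotation alone, i.e. the one with the opposite handedness."""
    scores = [
        np.mean([rotation_match(a, b) for j, b in enumerate(masks) if j != i])
        for i, a in enumerate(masks)
    ]
    return int(np.argmin(scores))


# Toy example: an L-tetromino, the same shape turned 180 degrees, and its mirror.
L = np.array([[1, 0], [1, 0], [1, 1]], dtype=bool)
print(odd_one_out([L, np.rot90(L, 2), L, np.fliplr(L)]))  # → 3
```

On real scans the masks will never match exactly. The sketch relies only on the mirrored shape scoring consistently lower than the rotated copies.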

I have very nearly got it working.

First, I download the image and load it with cv2.

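Then I threshold the image, invert the values, and find the contours. After that, I keep the largest contours, i.e. those whose areas are close to the largest one.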
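To make the semantics of this step explicit, here is a plain NumPy equivalent of the thresholding and inversion. With `cv2.THRESH_BINARY` (the `0` passed to `cv2.threshold`), pixels strictly greater than 128 become 255 and all others become 0. On a `uint8` array, `~` computes `255 - x`, so the dark shapes end up as 255. That is the non-zero foreground `cv2.findContours` traces.

The function name `binarize_inverted` is hypothetical. This is a sketch for illustration, not a replacement for the OpenCV call.

```python
import numpy as np


def binarize_inverted(gray, thresh=128):
    """NumPy equivalent of cv2.threshold(gray, thresh, 255, cv2.THRESH_BINARY)
    followed by bitwise inversion.

    THRESH_BINARY maps pixels > thresh to 255 and everything else to 0; the
    inversion then turns the dark shapes into 255 (foreground).
    """
    binary = np.where(gray > thresh, 255, 0).astype(np.uint8)
    return ~binary  # on uint8, ~x == 255 - x


# A white 6x6 canvas with a dark 2x3 block in it.
gray = np.full((6, 6), 255, dtype=np.uint8)
gray[1:3, 2:5] = 20
mask = binarize_inverted(gray)
print(int((mask == 255).sum()))  # → 6
```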

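Now I need to extract the shape associated with each contour and make it upright. This is where I am stuck: it almost works, but there are some edge cases.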

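My idea is simple. I find the minimum-area bounding box of the contour. Then I rotate the image so that the rectangle is upright, with all sides parallel to the grid lines and the longest side vertical. Next I compute the rectangle's new coordinates. Finally I extract the shape with array slicing.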
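One detail worth making explicit in this plan: the box corners can be mapped into the rotated image with the very same 2x3 matrix that is passed to `cv2.warpAffine`. That avoids doing the trigonometry separately.

The sketch below rebuilds that matrix from the formula in the OpenCV documentation for `cv2.getRotationMatrix2D`, where a positive angle means counter-clockwise on screen. It then applies the same canvas enlargement as the `rotate_image` function in the code below. The helper names are hypothetical.

With OpenCV available, calling `cv2.getRotationMatrix2D` and then `cv2.transform` on the `cv2.boxPoints` output (reshaped to `(N, 1, 2)`) should do the same job.

```python
import numpy as np


def rotation_matrix(center, angle_deg, scale=1.0):
    """2x3 matrix built with the formula documented for
    cv2.getRotationMatrix2D (positive angle = counter-clockwise on screen)."""
    cx, cy = center
    a = np.deg2rad(angle_deg)
    alpha, beta = scale * np.cos(a), scale * np.sin(a)
    return np.array([
        [alpha, beta, (1 - alpha) * cx - beta * cy],
        [-beta, alpha, beta * cx + (1 - alpha) * cy],
    ])


def expanded_rotation(w, h, angle_deg):
    """Same canvas-enlarging matrix as rotate_image(): rotate about the image
    centre, then shift so that nothing is cropped. Returns (M, new_size)."""
    size = np.array([w, h], dtype=float)
    M = rotation_matrix(size / 2.0, angle_deg)
    new_size = np.abs(M[:, :2]) @ size
    M[:, 2] += (new_size - size) / 2.0
    return M, new_size


def transform_points(M, pts):
    """Apply a 2x3 affine matrix to an (N, 2) array of (x, y) pixel points."""
    pts = np.asarray(pts, dtype=float)
    return pts @ M[:, :2].T + M[:, 2]


# Rotating a 4x2 image by 90 degrees: its corners land inside the new 2x4 canvas.
M, new_size = expanded_rotation(4, 2, 90)
corners = np.array([[0, 0], [4, 0], [4, 2], [0, 2]])
rotated = transform_points(M, corners)
```

Taking the minimum and maximum of the transformed box corners then gives the slice bounds in exactly the coordinate system of the warped image.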

I have implemented what I described:

```python
import cv2
import requests
import numpy as np

img = cv2.imdecode(
    np.asarray(
        bytearray(
            requests.get(
                "https://www.idrlabs.com/static/i/eysenck-iq/en/5.png"
            ).content,
        ),
        dtype=np.uint8,
    ),
    -1,
)


def get_contours(image):
    # Binarize, invert so the dark shapes become foreground, trace outer contours.
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    _, thresh = cv2.threshold(gray, 128, 255, 0)
    thresh = ~thresh
    contours, _ = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    return contours


def find_largest_areas(contours):
    # Indices of the contours whose areas are close to the largest ones.
    areas = [cv2.contourArea(contour) for contour in contours]
    area_ranks = [(area, i) for i, area in enumerate(areas)]
    area_ranks.sort(key=lambda x: -x[0])
    for i in range(1, len(area_ranks)):
        avg = sum(e[0] for e in area_ranks[:i]) / i
        if area_ranks[i][0] < avg * 0.95:
            break
    return {e[1] for e in area_ranks[:i]}


def find_largest_shapes(image):
    # Minimum-area rectangles of the largest contours, sorted by their centres.
    contours = get_contours(image)
    area_ranks = find_largest_areas(contours)
    contours = [e for i, e in enumerate(contours) if i in area_ranks]
    rectangles = [cv2.minAreaRect(contour) for contour in contours]
    rectangles.sort(key=lambda x: x[0])
    return rectangles


def rotate_image(image, angle):
    # Rotate about the centre, enlarging the canvas so nothing is cropped.
    size_reverse = np.array(image.shape[1::-1])
    M = cv2.getRotationMatrix2D(tuple(size_reverse / 2.0), angle, 1.0)
    MM = np.absolute(M[:, :2])
    size_new = MM @ size_reverse
    M[:, -1] += (size_new - size_reverse) / 2.0
    return cv2.warpAffine(image, M, tuple(size_new.astype(int)))


def int_sort(arr):
    # Round to the nearest integer and sort.
    return np.sort(np.intp(np.floor(arr + 0.5)))


RADIANS = {}


def rotate(x, y, angle):
    # Rotate (x, y) counter-clockwise by `angle` degrees, caching cos/sin.
    if pair := RADIANS.get(angle):
        cosa, sina = pair
    else:
        a = angle / 180 * np.pi
        cosa, sina = np.cos(a), np.sin(a)
        RADIANS[angle] = (cosa, sina)
    return x * cosa - y * sina, y * cosa + x * sina


def new_border(x, y, angle):
    # Width and height of the axis-aligned box around the rotated points.
    nx, ny = rotate(x, y, angle)
    nx = int_sort(nx)
    ny = int_sort(ny)
    return nx[3] - nx[0], ny[3] - ny[0]


def coords_to_pixels(x, y, w, h):
    # Convert centred, y-up coordinates to pixel (left, top, width, height).
    cx, cy = w / 2, h / 2
    nx, ny = x + cx, cy - y
    nx, ny = int_sort(nx), int_sort(ny)
    a, b = nx[0], ny[0]
    return a, b, nx[3] - a, ny[3] - b


def new_contour_bounds(pixels, w, h, angle):
    # Bounds of the box corners in the rotated, enlarged image.
    cx, cy = w / 2, h / 2
    x = np.array([-cx, cx, cx, -cx])
    y = np.array([cy, cy, -cy, -cy])
    nw, nh = new_border(x, y, angle)
    bx, by = pixels[..., 0] - cx, cy - pixels[..., 1]
    nx, ny = rotate(bx, by, angle)
    return coords_to_pixels(nx, ny, nw, nh)


def extract_shape(rectangle, image):
    box = np.intp(np.floor(cv2.boxPoints(rectangle) + 0.5))
    h, w = image.shape[:2]
    angle = -rectangle[2]
    x, y, dx, dy = new_contour_bounds(box, w, h, angle)
    image = rotate_image(image, angle)
    shape = image[y : y + dy, x : x + dx]
    sh, sw = shape.shape[:2]
    # Make the longest side vertical.
    if sh < sw:
        shape = np.rot90(shape)
    return shape


rectangles = find_largest_shapes(img)
for rectangle in rectangles:
    shape = extract_shape(rectangle, img)
    cv2.imshow("", shape)
    cv2.waitKeyEx(0)
```

But it is not perfect:

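As you can see, the result includes everything inside the bounding rectangle, not just the main shape enclosed by the contour, so some extra parts stick out. I want the extracted shape to contain only the region enclosed by the contour.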
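For reference, the usual OpenCV way to keep only the pixels inside a contour takes two steps. First, draw that contour filled into a blank single-channel mask with `cv2.drawContours(mask, [contour], -1, 255, thickness=cv2.FILLED)`. Second, apply the mask, for example with `cv2.bitwise_and(image, image, mask=mask)`.

The NumPy-only sketch below illustrates the same idea with an even-odd point-in-polygon test on pixel centres. `polygon_mask` and `keep_inside` are hypothetical names. Pixels lying exactly on an edge may be classified differently from OpenCV's rasterisation.

```python
import numpy as np


def polygon_mask(height, width, polygon):
    """Boolean mask, True where the pixel centre lies inside `polygon`
    (an (N, 2) sequence of (x, y) vertices), using the even-odd rule."""
    ys, xs = np.mgrid[0:height, 0:width]
    px, py = xs + 0.5, ys + 0.5  # pixel centres
    inside = np.zeros((height, width), dtype=bool)
    poly = np.asarray(polygon, dtype=float)
    for (x1, y1), (x2, y2) in zip(poly, np.roll(poly, -1, axis=0)):
        # Does this edge straddle the horizontal ray through each pixel centre?
        crosses = (y1 > py) != (y2 > py)
        # x where the edge meets that ray (inf/nan for horizontal edges,
        # which never cross, so those values are masked out below).
        with np.errstate(divide="ignore", invalid="ignore"):
            x_at = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
        inside ^= crosses & (px < x_at)
    return inside


def keep_inside(image, polygon):
    """Zero out every pixel outside the polygon (grayscale or colour image)."""
    mask = polygon_mask(image.shape[0], image.shape[1], polygon)
    out = np.zeros_like(image)
    out[mask] = image[mask]
    return out


square = [(1, 1), (4, 1), (4, 3), (1, 3)]
print(int(polygon_mask(5, 5, square).sum()))  # → 6
```

The mask would have to be applied before cropping, or rotated together with the image, so that it stays aligned with the shape.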

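The more serious problem is that, for some reason, the bounding box is not always aligned with the contour's principal axis. You can see this in the last image: the shape is not upright, and there are black areas.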
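For comparison, a shape's principal axis is often estimated from second-order central moments rather than from the minimum-area rectangle. `cv2.moments` reports these quantities as `mu20`, `mu02` and `mu11`. The NumPy sketch below, with the hypothetical name `principal_axis_angle`, computes the major-axis orientation of a binary mask using `theta = 0.5 * atan2(2*mu11, mu20 - mu02)`.

Row indices grow downwards, so the angle is measured from +x towards +y, i.e. clockwise on screen. Passing it as the `angle` of `cv2.getRotationMatrix2D` should map the major axis onto the horizontal. Adding 90 should make the axis vertical.

This is a swapped-in technique, not what the question's code does. For irregular shapes it need not agree with the rectangle's long side.

```python
import numpy as np


def principal_axis_angle(mask):
    """Orientation (degrees) of the major axis of a binary mask, from
    second-order central moments; measured from +x towards +y (image rows
    grow downwards, so positive means clockwise on screen)."""
    ys, xs = np.nonzero(mask)
    x = xs - xs.mean()
    y = ys - ys.mean()
    mu20, mu02, mu11 = (x * x).mean(), (y * y).mean(), (x * y).mean()
    return float(np.degrees(0.5 * np.arctan2(2 * mu11, mu20 - mu02)))


diagonal = np.eye(5, dtype=bool)  # a line running down and to the right
print(round(principal_axis_angle(diagonal), 6))  # → 45.0
```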

How can I fix these problems?